Google.ai: bringing the benefits of AI to everyone

By the time Google notices that the starting point toward a real, general AI is very basic (giving coordinates to language, crudely at first: Heminemetics), it may be too late for them.

In any case, all of these technical advances in AI are very powerful (despite forgetting basic common sense).

This news is from Google.ai:

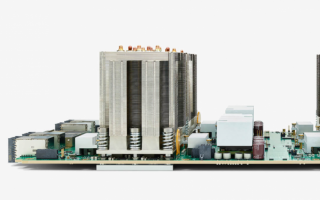

Google’s second-generation Tensor Processing Unit is coming to Cloud

Training state-of-the-art machine learning models requires an enormous amount of computation, and researchers, engineers, and data scientists often wait weeks for results. To solve this problem, we’ve designed an all-new ML accelerator from scratch — a second-generation TPU, or Tensor Processing Unit — that can accelerate both training and running ML models.

Each device delivers up to 180 teraflops of floating-point performance, and these new TPUs are designed to be connected into even larger systems. A 64-TPU pod can apply up to 11.5 petaflops of computation to a single ML training task.
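As a quick sanity check on those figures, here is a minimal sketch of the arithmetic. It assumes the pod number is simply the per-device peak multiplied by the device count, ignoring any interconnect overhead; the function and constant names are my own, not anything from Google.

```python
# Peak figures quoted in the announcement above.
TERAFLOPS_PER_DEVICE = 180  # "up to 180 teraflops" per second-generation TPU
DEVICES_PER_POD = 64        # a "64-TPU pod"


def pod_petaflops(devices: int = DEVICES_PER_POD,
                  teraflops_each: float = TERAFLOPS_PER_DEVICE) -> float:
    """Aggregate peak throughput in petaflops, assuming perfect linear scaling."""
    return devices * teraflops_each / 1000.0  # 1 petaflop = 1,000 teraflops


if __name__ == "__main__":
    print(f"{pod_petaflops():.2f} petaflops")  # 64 * 180 / 1000 = 11.52
```

So 64 devices at 180 teraflops each give 11.52 petaflops, which matches the "up to 11.5 petaflops" quoted for a pod.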

We’re extremely excited about these new TPUs, and we want to share this technology with the world so that everyone can access their benefits. That’s why we’re bringing our second-generation TPUs to Google Cloud for the first time as Cloud TPUs on GCE, the Google Compute Engine. You’ll be able to mix-and-match Cloud TPUs with Skylake CPUs, NVIDIA GPUs, and all of the rest of our infrastructure and services to build and optimize the perfect machine learning system for your needs. Best of all, Cloud TPUs are easy to program via TensorFlow, the most popular open-source machine learning framework.


Many of the machine learning breakthroughs we’re witnessing today are being driven by an extremely open and collaborative international community of researchers. These aren’t only academics, either: people with many different kinds of backgrounds are making major contributions across a huge variety of domains.

One thing nearly all of these researchers have in common is their urgent need for more computing power, both to accelerate the work they are doing today and to enable them to explore bold new ideas that would be impractical without much more powerful hardware.

So to empower the most promising researchers to both pursue their dreams and share further breakthroughs with the world, we are creating the TensorFlow Research Cloud, a cluster of 1,000 Cloud TPUs that we will make available to top researchers for free. We are setting up a program to accept and evaluate applications for compute time on a rolling basis, and we’re excited to see what people will do with Cloud TPUs.